This is a text version of these slides for easier consumption; I recommend the slides themselves if you have time!

Part 1: The Review Process - A Reality Check

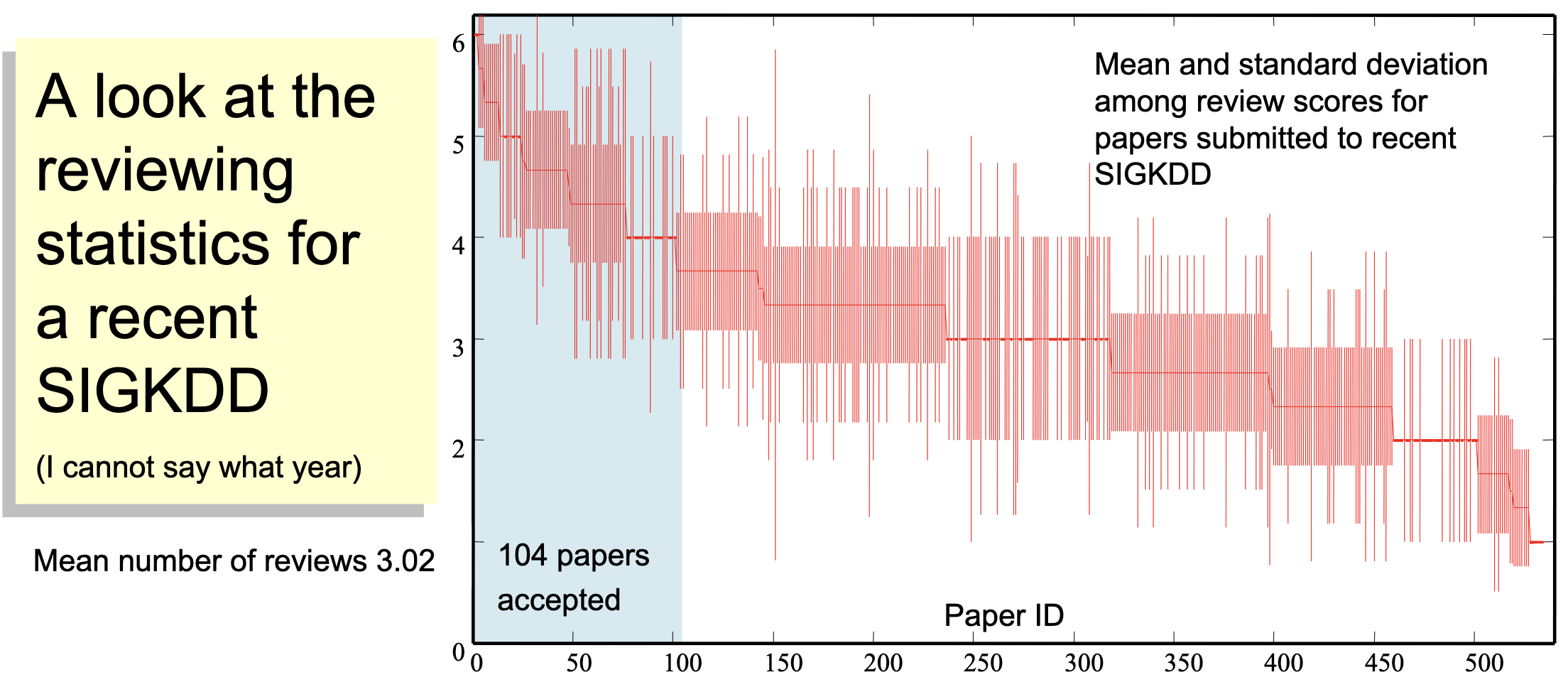

Let’s start with a dose of reality: the review process for top-tier conferences like SIGKDD is, to put it mildly, imperfect.

The Curious Case of Srikanth Krishnamurthy:

A famous anecdote illustrates this perfectly. In 2004, a student of Srikanth Krishnamurthy submitted a paper to MobiCom. Deciding to change the title, the student resubmitted the paper, accidentally creating two submissions of essentially the same paper. One version was rejected with scores of 1, 2, and 3. The other was accepted with scores of 3, 4, and 5!

What Does This Mean?

- The variance in reviewer scores is much larger than the difference in mean scores between accepted and rejected papers near the acceptance/rejection borderline.

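- To halve the standard deviation of a paper’s mean score, we’d need to quadruple the number of reviews, because that standard deviation shrinks only with the square root of the number of reviewers!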

- Statistically, it’s highly probable that worthy papers are rejected in favor of less worthy ones.
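A small simulation makes the “lottery” concrete. It assumes, purely for illustration, that each review equals the paper’s true quality plus independent Gaussian noise. The quality gap, the noise level, and the function names are hypothetical choices for this sketch, not measured SIGKDD statistics.

```python
import math
import random

def std_error(sigma: float, n_reviews: int) -> float:
    """Standard deviation of the mean of n independent reviews, each with noise sigma."""
    return sigma / math.sqrt(n_reviews)

def prob_worse_paper_wins(gap: float, sigma: float, n_reviews: int,
                          trials: int = 20_000, seed: int = 0) -> float:
    """Monte Carlo estimate of how often a paper whose true quality is `gap`
    points lower still receives the higher mean review score."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(trials):
        better = sum(rng.gauss(gap, sigma) for _ in range(n_reviews)) / n_reviews
        worse = sum(rng.gauss(0.0, sigma) for _ in range(n_reviews)) / n_reviews
        if worse > better:
            wins += 1
    return wins / trials

# Quadrupling the number of reviews (3 -> 12) halves the standard error.
print(std_error(1.0, 3) / std_error(1.0, 12))  # about 2.0

# Hypothetical borderline pair: true gap of 0.5 points, per-review noise sigma = 1.0.
for n in (3, 12):
    print(n, prob_worse_paper_wins(gap=0.5, sigma=1.0, n_reviews=n))
```

Under these made-up numbers, the weaker paper wins less often with twelve reviews than with three. It still wins a noticeable fraction of the time, which is exactly the point of the lottery framing.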

The Good News:

The good news is that small improvements in your paper can significantly increase your chances of acceptance. If you’re on the borderline, convincing just one reviewer to raise their score by a single point can make the difference between rejection and acceptance.

The Takeaway: The review process is a bit of a lottery. Our goal is to make our paper as strong as possible to maximize our chances of getting a winning ticket.

Part 2: Writing a SIGKDD Paper

Now, let’s get to the heart of the matter: writing a paper that stands out.

Finding Problems and Data

What Makes a Good Research Problem?

A good research problem has the following characteristics:

- Importance: Solving it should have a real-world impact (making money, saving lives, helping people learn, etc.).

- Real Data: You need to be able to get your hands on real data (analyzing the DNA of the Loch Ness Monster is interesting, but…).

- Incremental Progress: Choose problems where you can make incremental progress. All-or-nothing problems can be too risky, especially for early-career researchers.

- Clear Metric for Success: You need to know when you’re making progress. Define a clear, measurable metric for success.

Sources of Problems:

- Domain Experts: Collaborate with experts in other fields (biology, medicine, anthropology, etc.). They have problems, and you have data mining skills. It’s a win-win!

- Read, Read, Read: The best way to find problems is to read lots of papers, both in SIGKDD and elsewhere.

- Latent Needs: Don’t just ask domain experts what they want. Try to understand their latent needs – the problems they might not even realize they have.

Example (Latent Need):

A biologist asked for help with a sampling/estimation problem. After an hour of discussion, it became clear that the quantity didn’t need to be estimated; it could be computed exactly! Understanding the underlying need led to a much better solution.

Extending Existing Ideas:

A good way to generate research ideas is to take an existing idea (call it “X”) and extend it:

- Make it more accurate (statistically significantly).

- Make it faster (usually by an order of magnitude).

- Make it an anytime algorithm.

- Make it an online (streaming) algorithm.

- Make it work for a different data type.

- Make it work on low-powered devices.

- Explain why it works so well.

- Make it work for distributed systems.

- Apply it in a novel setting.

- Remove a parameter or assumption.

- Make it disk-aware.

- Make it simpler.

Examples:

- “We took the Nearest Neighbor algorithm and made it an anytime algorithm”

- “We applied motif discovery, which is useful for DNA, to time series data”

- “We adapted a bottom-up algorithm for online settings”
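To make “anytime” concrete: an anytime algorithm can be interrupted at any point and still return its best answer so far, and that answer improves the longer it runs. The sketch below is a hypothetical anytime 1-nearest-neighbor classifier with a budget of distance computations. The function names, the budget parameter, and the random ordering of training examples are illustrative assumptions, not the specific method from the paper the first example alludes to.

```python
import math
import random
from typing import Iterator, List, Optional, Sequence, Tuple

Example = Tuple[Sequence[float], str]

def anytime_1nn(query: Sequence[float], train: List[Example],
                seed: int = 0) -> Iterator[Tuple[Optional[str], float]]:
    """Yield (best label so far, best distance so far) after each training example.

    The caller can stop at any time and use the latest answer; running to
    completion gives the exact 1-nearest-neighbor result.
    """
    order = list(range(len(train)))
    random.Random(seed).shuffle(order)  # assumption: random order; a smarter order helps early answers
    best_label, best_dist = None, math.inf
    for i in order:
        x, label = train[i]
        d = math.dist(query, x)
        if d < best_dist:
            best_label, best_dist = label, d
        yield best_label, best_dist

def classify_with_budget(query: Sequence[float], train: List[Example],
                         budget: int) -> Optional[str]:
    """Interrupt after `budget` distance computations and return the current answer."""
    answer = None
    for step, (label, _) in enumerate(anytime_1nn(query, train), start=1):
        answer = label
        if step >= budget:
            break
    return answer

train = [((0.0, 0.0), "a"), ((0.1, 0.2), "a"), ((5.0, 5.0), "b"), ((5.2, 4.9), "b")]
print(classify_with_budget((4.8, 5.1), train, budget=1))           # best guess after one comparison
print(classify_with_budget((4.8, 5.1), train, budget=len(train)))  # exact 1-NN: b
```

The research contribution in such a paper usually lies in the ordering. Visiting the most informative training examples first makes the early answers good, which is what makes an anytime version worth publishing.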

Framing the Problem

Clear Problem Statement:

Many papers lack a clear problem statement. Can you state your research contribution in a single sentence? Examples:

- “X is good for Y (in the context of Z).”

- “X can be extended to achieve Y (in the context of Z).”

- “An X approach to the problem of Y mitigates the need for Z.”

If a reviewer can’t form such a sentence after reading your abstract, your paper is in trouble.

Falsifiability:

Your research statement should be falsifiable. That means there should be a way to prove it wrong.

- Bad: “To the best of our knowledge, this is the most sophisticated subsequence matching solution mentioned in the literature.” (How can you prove this wrong?)

- Good: “Quicksort is faster than bubblesort.” (You can test this.)

- Bad: “We can approximately cluster DNA with DFT.” (What does “approximately” mean? Any random arrangement could be considered a “clustering.”)

- Good: “We improve the mean time to find an embedded pattern by a factor of ten.” (This is measurable and falsifiable.)
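A falsifiable claim comes with a concrete test that could fail. As an illustration, a minimal timing experiment for “Quicksort is faster than bubblesort” might look like this. The input size, the number of repeats, and these textbook implementations are assumptions chosen for the sketch.

```python
import random
import time

def bubble_sort(items):
    a = list(items)
    n = len(a)
    for i in range(n):
        swapped = False
        for j in range(n - 1 - i):
            if a[j] > a[j + 1]:
                a[j], a[j + 1] = a[j + 1], a[j]
                swapped = True
        if not swapped:  # early exit: already sorted
            break
    return a

def quick_sort(items):
    # Simple (not in-place) quicksort with a middle-element pivot.
    if len(items) <= 1:
        return list(items)
    pivot = items[len(items) // 2]
    less = [x for x in items if x < pivot]
    equal = [x for x in items if x == pivot]
    greater = [x for x in items if x > pivot]
    return quick_sort(less) + equal + quick_sort(greater)

def median_time(sort_fn, data, repeats=3):
    times = []
    for _ in range(repeats):
        start = time.perf_counter()
        sort_fn(data)
        times.append(time.perf_counter() - start)
    return sorted(times)[len(times) // 2]

rng = random.Random(42)
data = [rng.random() for _ in range(1000)]
# Check correctness before timing anything.
assert bubble_sort(data) == quick_sort(data) == sorted(data)
t_bubble = median_time(bubble_sort, data)
t_quick = median_time(quick_sort, data)
print(f"bubble: {t_bubble:.4f}s  quick: {t_quick:.4f}s")
print("claim survives this test" if t_quick < t_bubble else "claim falsified on this input")
```

Stating the inputs is part of making the claim falsifiable. On an already-sorted list, this bubble sort exits after a single pass, so the claim only holds for the kind of input you specify.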

From Problem to Data

Once you have a problem, you need data. And you need it early in the process, not a few days before the deadline!

Why Real Data Matters:

- Credibility: Real data significantly increases your paper’s chances of acceptance.

- Better Research: Real data prevents you from converging on unrealistic solutions.

- Feedback Loop: Early experience with real data can inform and refine your research question.

Is Synthetic Data Okay?

There’s a huge difference between:

- “We wrote a Matlab script to create random trajectories.”

- “We glued tiny radio transmitters to the backs of Mormon crickets and tracked their trajectories.”

The second is real data. The first is synthetic.

Why is Synthetic Data Bad?

- Conflict of Interest: You’re creating the data that validates your algorithm. It’s like grading your own homework.

- Hidden Bias: Even if you don’t consciously bias the data, you might do so unconsciously.

- Contradiction: If you claim your problem is important, but you only use synthetic data, reviewers will wonder why there’s no real data.

Example (Synthetic Data Leading to Contradiction):

A paper claimed that processing large datasets is increasingly important, but then admitted to using synthetic data due to a lack of publicly available large datasets. This is a red flag.

Real Data Motivates Clever Algorithms:

Real data often reveals challenges and constraints that you wouldn’t think of when working with synthetic data. This can lead to more innovative and relevant algorithms.

Example:

Instead of saying, “If I rotate my hand-drawn apples, I need a rotation-invariant algorithm,” use real images of fly wings. Even in a structured domain like wing imaging, rotation invariance is necessary because the robots handling the wings can’t guarantee consistent orientation.

Where to Get Good Data:

- Domain expert collaborators.

- Formal data mining archives (UCI Knowledge Discovery in Databases Archive, UCR Time Series and Shape Archive).

- General archives (Chart-O-Matic, NASA GES DISC).

- Create it yourself! (Get creative!)

Solving Problems

Now you have a problem and data. Time to solve it!

Avoid Complex Solutions:

Complex solutions are:

- Less likely to generalize.

- Easier to overfit.

- Harder to explain.

- Difficult to reproduce.

- Less likely to be cited.

Unjustified Complexity (Examples):

- A paper that combines case-based reasoning, fuzzy decision trees, and genetic algorithms, with 15+ parameters. How reproducible is that?

- A paper that claims to have the “most sophisticated subsequence matching solution,” but provides no way to verify this.

Simplicity is a Strength:

If your solution is simple, sell that simplicity. Claim it as an advantage. Your paper is implicitly claiming, “This is the simplest way to get results this good.” Make that claim explicit.

Problem-Solving Techniques:

- Problem Relaxation: If you can’t solve the original problem, make it easier. Solve the easier version, then revisit the original.

- Example: Instead of maintaining the closest pair of real-valued points in a sliding window in worst-case linear time, relax to amortized linear time, or assume discrete data, or assume infinite space.

- Looking to Other Fields: Find analogous problems in other fields (computational biology, information retrieval, etc.) and adapt their solutions.

- Example: Adapting a DNA motif-finding algorithm for time series motif discovery (SIGKDD 2002).

Eliminate Simple Ideas:

Before developing a complex solution, try simple ones. They might work surprisingly well! Your paper implicitly claims to offer the simplest way to get results this good, so you should check that claim.

Case Study 1 (Eliminate Simple Ideas):

Classifying crop types using satellite imagery. The initial goal was to develop a complex dynamic time warping (DTW) approach. But it turned out that a single line of Matlab code (summing pixel values) achieved perfect accuracy!
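Checking a trivial baseline first costs very little. In the spirit of the one-line Matlab solution, the sketch below classifies each series by the nearest class mean of a single summary statistic, the sum of its values, and scores it with leave-one-out accuracy. The toy data, the class names “A” and “B”, and the function name are hypothetical. A real study would run this baseline on the actual data alongside the complex method.

```python
from statistics import mean
from typing import List, Sequence

def loo_accuracy_sum_baseline(X: List[Sequence[float]], y: List[str]) -> float:
    """Leave-one-out accuracy of a trivial rule: predict the class whose mean
    'sum of values' is closest to the held-out example's sum."""
    feats = [float(sum(s)) for s in X]
    correct = 0
    for i in range(len(X)):
        class_means = {}
        for label in set(y):
            vals = [f for j, (f, lab) in enumerate(zip(feats, y)) if j != i and lab == label]
            if vals:
                class_means[label] = mean(vals)
        pred = min(class_means, key=lambda lab: abs(feats[i] - class_means[lab]))
        correct += pred == y[i]
    return correct / len(X)

# Hypothetical toy data: class "A" images are systematically brighter than class "B".
X = [[0.9, 0.8, 0.95], [0.85, 0.9, 0.8], [0.2, 0.3, 0.25], [0.3, 0.2, 0.35]]
y = ["A", "A", "B", "B"]
print(loo_accuracy_sum_baseline(X, y))  # 1.0 on this toy data
```

If a baseline like this already matches the complex method, the complex method has nothing to add. Finding that out before writing the paper is far better than hearing it from a reviewer.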

Case Study 2 (Eliminate Simple Ideas):

A paper on generating the “most typical” time series used a complex method. But under their metric of success, a constant line was the optimal answer for any dataset!

The Importance of Being Cynical:

Not every statement in the literature is true. Be skeptical. There are research opportunities in confirming or refuting “known facts.”

Non-Existent Problems:

Believe it or not, some papers address problems that don’t actually exist!

Example:

Many papers claim to address the problem of comparing time series of different lengths. But simply resampling the data to the same length is often sufficient, and it performs as well as (or better than) complex methods designed for different lengths.
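Resampling takes only a few lines. The minimal sketch below uses linear interpolation to bring two series of different lengths to a common length, after which an ordinary Euclidean distance applies. Linear interpolation is one reasonable choice among several, and the target length is a free choice in this sketch.

```python
import math
from typing import List, Sequence

def resample(series: Sequence[float], new_len: int) -> List[float]:
    """Linearly interpolate `series` onto `new_len` evenly spaced points."""
    n = len(series)
    if n == 1 or new_len == 1:
        return [float(series[0])] * new_len
    out = []
    for k in range(new_len):
        pos = k * (n - 1) / (new_len - 1)  # position in original index units
        i = min(int(pos), n - 2)           # left neighbor (clamped at the last segment)
        frac = pos - i
        out.append(series[i] * (1 - frac) + series[i + 1] * frac)
    return out

def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

short = [0.0, 1.0, 2.0, 3.0]
long_ = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0]
print(resample(short, len(long_)))                   # [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0]
print(euclidean(resample(short, len(long_)), long_))  # 0.0
```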

Part 3: Writing the Paper (The Details)

Okay, you’ve found a good problem, gathered real data, and developed a solution. Now comes the crucial part: writing the paper.

Structure of a Good Paper:

Here’s a general outline (adapted from Hengl and Gould, 2002):

- Working Title: Start with a working title.

- Introduction: Introduce the topic, define terms (informally), and motivate the problem.

- Related Work: What’s been done before?

- Gap: What needs to be done?

- Research Questions: Formally pose your research questions.

- Background: Explain any necessary background material.

- Definitions: Introduce formal definitions.

- Algorithm/Representation: Describe your novel contribution.

- Experimental Setup: Explain your experiments and what they will show.

- Datasets: Describe the datasets used.

- Results: Summarize results with figures and tables.

- Discussion: Discuss the results, including unexpected findings and discrepancies.

- Limitations: State the limitations of your study.

- Importance: State the importance of your findings.

- Future Work: Announce directions for future research.

- Acknowledgements: Thank those who helped.

- References: Cite your sources.

Key Principle: Don’t Make the Reviewer Think!

This principle, borrowed from Steve Krug’s book on web design, is surprisingly applicable to paper writing:

- Reviewers are busy and unpaid. Don’t make them work harder than necessary.

- If you let the reader think, they might think wrong!

Make your paper as clear, concise, and easy to understand as possible.

Keogh’s Maxim:

“If you can save the reviewer one minute of their time, by spending one extra hour of your time, then you have an obligation to do so.”

This is because the author benefits much more from an accepted paper than a reviewer does from reviewing it.

The First Page is Crucial (Anchoring):

Reviewers often form an initial impression on the first page, and this impression tends to stick (a psychological phenomenon called “anchoring”).

The Introduction Should Cover:

- What is the problem?

- Why is it interesting and important?

- Why is it hard? (Why do naive approaches fail?)

- Why hasn’t it been solved before? (Or, what’s wrong with previous solutions?)

- What are the key components of your approach and results? (Include limitations.)

- Summary of Contributions: A final paragraph or subsection listing your main contributions in bullet form.

Reproducibility

Reproducibility is a cornerstone of the scientific method. It means that someone else should be able to replicate your results independently.

Why Reproducibility Matters for SIGKDD:

- Confidence: It instills confidence in reviewers that your work is correct.

- Value: It gives the (true) appearance of value.

- Citations: It can increase your citations.

Types of Non-Reproducibility:

- Explicit: Authors don’t provide data or parameter settings.

- Implicit: The work is so complex that it’s practically impossible to reproduce, or requires expensive resources.

Examples of Non-Reproducibility:

- A paper that provides no details about the datasets used, the queries selected, or the metrics used to evaluate performance.

- A paper that relies on a complex combination of algorithms (case-based reasoning, fuzzy decision trees, genetic algorithms) with many parameters.

How to Ensure Reproducibility:

- State all parameters and settings in your paper.

- Create a webpage with annotated data and code.

- Test the reproducibility yourself! Assume you lose all your files. Can you recreate your experiments using only the webpage?
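One low-effort habit that supports the “lose all your files” test is to have every experiment write out its exact settings next to its results. The manifest format, field names, file names, and the placeholder “experiment” below are hypothetical. The point is that the seed, every parameter, and a checksum of the input data are recorded automatically rather than from memory.

```python
import hashlib
import json
import platform
import random
import tempfile
from pathlib import Path

def run_experiment(data_path: Path, params: dict, seed: int, out_dir: Path) -> dict:
    """Run a (placeholder) experiment and write everything needed to rerun it."""
    rng = random.Random(seed)  # one seeded generator for all randomness
    values = [float(v) for v in data_path.read_text().split()]
    sample = rng.sample(values, k=params["sample_size"])
    result = {"mean_of_sample": sum(sample) / len(sample)}  # stand-in for a real result
    manifest = {
        "data_file": data_path.name,
        "data_sha256": hashlib.sha256(data_path.read_bytes()).hexdigest(),
        "seed": seed,
        "params": params,
        "python_version": platform.python_version(),
        "result": result,
    }
    out_dir.mkdir(parents=True, exist_ok=True)
    (out_dir / "manifest.json").write_text(json.dumps(manifest, indent=2, sort_keys=True))
    return manifest

# Rerunning from the recorded settings should reproduce the recorded result.
with tempfile.TemporaryDirectory() as tmp:
    data = Path(tmp) / "data.txt"
    data.write_text("1 2 3 4 5 6 7 8 9 10")
    first = run_experiment(data, {"sample_size": 3}, seed=7, out_dir=Path(tmp) / "run1")
    saved = json.loads((Path(tmp) / "run1" / "manifest.json").read_text())
    again = run_experiment(data, saved["params"], seed=saved["seed"], out_dir=Path(tmp) / "run2")
    print(again["result"] == first["result"])  # True
```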

Addressing Objections to Reproducibility:

- “I can’t share my data for privacy reasons.” Prove it. Can you create a synthetic dataset that has similar properties?

- “Reproducibility takes too much time and effort.” It can actually save time in the long run (when you need to revisit your work for a journal version).

- “Strangers will use your code/data to compete with you.” That’s a good thing! It means people are reading and building on your work.

- “No one else does it. I won’t get any credit for it.” It’s a way to stand out from the competition.
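For the privacy objection, a simple starting point is to assume the relevant “properties” are the feature means, spreads, and correlation. Fit those statistics on the private data, then sample new records from a Gaussian with the same values. This is only a sketch: it preserves first- and second-order statistics and nothing else (no outliers, no nonlinear structure). Whether that is adequate depends on what your experiments actually test. The two-feature setup and the stand-in “private” data are hypothetical.

```python
import math
import random
from statistics import mean, pstdev

def fit_stats(xs, ys):
    """Means, standard deviations, and Pearson correlation of two features."""
    mx, my = mean(xs), mean(ys)
    sx, sy = pstdev(xs), pstdev(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / len(xs)
    return mx, my, sx, sy, cov / (sx * sy)

def synthesize(stats, n, seed=0):
    """Draw n records from a bivariate Gaussian matching the fitted statistics."""
    mx, my, sx, sy, rho = stats
    rng = random.Random(seed)
    records = []
    for _ in range(n):
        z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        x = mx + sx * z1
        # 2x2 Cholesky factor: mixing z1 into y gives correlation rho with x
        y = my + sy * (rho * z1 + math.sqrt(1.0 - rho ** 2) * z2)
        records.append((x, y))
    return records

# Stand-in for the private data (hypothetical; in practice this never leaves your machine).
rng = random.Random(1)
private_x = [rng.gauss(50.0, 10.0) for _ in range(500)]
private_y = [0.8 * x + rng.gauss(0.0, 5.0) for x in private_x]

stats = fit_stats(private_x, private_y)
synthetic = synthesize(stats, n=20_000)
synth_stats = fit_stats([r[0] for r in synthetic], [r[1] for r in synthetic])
print("private  :", [round(v, 2) for v in stats])
print("synthetic:", [round(v, 2) for v in synth_stats])
```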

Writing Style and Word Choice

Parameters (are bad):

- Minimize the number of parameters in your algorithm.

- For every parameter, show how to set a good value, or show that the exact value doesn’t matter much.
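One way to show that the exact value doesn’t matter much is a sensitivity sweep: evaluate the method over a wide range of values for the parameter and report the spread. In the sketch below, the “method” is a hypothetical k-nearest-neighbor classifier on made-up one-dimensional data, and k plays the role of the parameter. Substitute your own algorithm and evaluation.

```python
import random
from collections import Counter

def knn_predict(train, x, k):
    """Majority label among the k training points closest to x."""
    neighbors = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return Counter(label for _, label in neighbors).most_common(1)[0][0]

def loo_accuracy(data, k):
    """Leave-one-out accuracy of k-NN on `data`."""
    hits = 0
    for i, (x, label) in enumerate(data):
        rest = data[:i] + data[i + 1:]
        hits += knn_predict(rest, x, k) == label
    return hits / len(data)

# Hypothetical data: two overlapping Gaussian classes.
rng = random.Random(0)
data = [(rng.gauss(0.0, 1.0), "A") for _ in range(100)] + \
       [(rng.gauss(3.0, 1.0), "B") for _ in range(100)]

sweep = {k: loo_accuracy(data, k) for k in (1, 3, 5, 7, 9, 11, 15, 21)}
for k, acc in sweep.items():
    print(f"k={k:>2}  accuracy={acc:.3f}")
print("spread:", round(max(sweep.values()) - min(sweep.values()), 3))
```

A narrow spread over a wide range is evidence that the parameter is not critical. A wide spread means you owe the reader a principled way to set it.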

Unjustified Choices (are bad):

- Explain and justify every choice, even if it seems arbitrary.

- Bad: “We used single linkage clustering…” (Why?)

- Good: “We experimented with single, group, and complete linkage, but found little difference, so we report only single linkage…”

Important Words/Phrases:

- Optimal: Does not mean “very good.” It means the best possible solution.

- Proved: Does not mean “demonstrated.” Experiments rarely prove anything.

- Significant: Be careful not to confuse the informal meaning with the statistical meaning.

- Complexity: Has two meanings (intricacy and time complexity). Be clear.

- It is easy to see: A cliché. Is it really easy to see?

- Actual: Almost always unnecessary.

- Theoretically: Almost always unnecessary.

- etc.: Only use it if the remaining items on the list are obvious.

- Correlated: In informal language, this implies some relationship. In statistics, (Pearson) correlation measures only a linear relationship.

- (Data) Mined: Don’t overuse it. “We clustered/classified the data” is more descriptive.

- In this paper: Where else would it be? Remove it.
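Two entries above, “significant” and “correlated,” have precise statistical meanings that are easy to check. The sketch below shows both. First, Pearson’s coefficient scores a perfect but nonlinear relationship as zero. Second, a paired sign-flip permutation test checks whether one method’s per-dataset accuracy is significantly higher than another’s. The accuracy numbers are invented for illustration. The permutation test is one reasonable choice among several (a Wilcoxon signed-rank test is another).

```python
import math
import random

def pearson(xs, ys):
    """Pearson correlation: measures *linear* association only."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(sxx * syy)

def paired_permutation_test(a, b, n_perm=10_000, seed=0):
    """Two-sided p-value for H0: mean(a - b) == 0, by randomly flipping
    the sign of each paired difference."""
    diffs = [x - y for x, y in zip(a, b)]
    observed = abs(sum(diffs)) / len(diffs)
    rng = random.Random(seed)
    extreme = 0
    for _ in range(n_perm):
        flipped = sum(d if rng.random() < 0.5 else -d for d in diffs) / len(diffs)
        if abs(flipped) >= observed:
            extreme += 1
    return (extreme + 1) / (n_perm + 1)  # add-one correction: p is never exactly 0

# "Correlated": y is completely determined by x, yet r = 0.
xs = [-2, -1, 0, 1, 2]
print(pearson(xs, [x * x for x in xs]))      # 0.0
print(pearson(xs, [2 * x + 1 for x in xs]))  # 1.0

# "Significant": hypothetical accuracies of two methods on the same eight datasets.
ours = [0.91, 0.88, 0.93, 0.85, 0.90, 0.87, 0.92, 0.89]
base = [0.89, 0.86, 0.90, 0.84, 0.88, 0.85, 0.90, 0.88]
print(paired_permutation_test(ours, base))  # well below 0.05 here
```

Note that “significant” in the statistical sense says nothing about size. A tiny but consistent improvement can be significant, so report the effect size as well.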

DABTAU (Define Acronyms Before They Are Used):

Don’t assume reviewers know every acronym.

Use All the Space Available:

Don’t leave large blank spaces. Reviewers might think you could have included more experiments, discussion, or references. A good 9-page paper is often a carefully pared-down 12 or 13-page paper.

Color in the Text:

You can use color to emphasize points, link text to figures, etc. But be mindful that some readers might not see the color version.

Avoid Weak Language:

- Bad: “…it might fail to give accurate results.”

- Good: “…it has been shown by [7] to give inaccurate results.” (or) “…it will give inaccurate results, as we show in Section 7.”

- Bad: “In this paper we attempt to…”

- Good: “In this paper, we show, for the first time…”

Avoid Overstating:

- Bad: “We have shown our algorithm is better than a decision tree.”

- Good: “We have shown our algorithm can be better than decision trees, when the data is correlated.”

Use the Active Voice:

- Bad: “Experiments were conducted…”

- Good: “We conducted experiments…”

Avoid Implicit Pointers:

Be careful with words like “it,” “this,” and “these.” Make sure it’s clear what they refer to.

Avoid “Laundry List” Citations:

Don’t just list a bunch of references without explaining their relevance. It looks lazy and suggests you haven’t read the papers.

Motivating Your Work

Address Alternative Solutions:

If there are other ways to solve your problem, explain explicitly why your approach is better. Don’t assume reviewers will automatically know.

Cite Other Papers Forcefully:

Use quotes from other papers to strengthen your arguments.

- Good: “However, no matter what representation is used, rotation invariance seems to be uniquely difficult to handle. For example [20] notes ‘rotation is always something hard to handle compared with translation and scaling’.”

- Bad: “Paper [20] notes that rotation is hard to deal with.”

Convince Reviewers Your Work is Original:

- Do a thorough literature search.

- Use mock reviewers.

- Explain why your work is different from previous work.

Making Good Figures

Good figures are crucial for conveying your ideas clearly and concisely.

Principles for Good Figures:

- Think about the point you want to make. Should it be a figure, a table, or just text?

- Use color (but don’t rely on it).

- Use linking (connecting the same data in different views).

- Use direct labeling (label elements directly on the figure, instead of using a key).

- Write meaningful captions.

- Embrace minimalism (omit needless elements).

- Take pride in your work!

Common Problems with Figures:

- Too many patterns on bars.

- Using both different symbols and different lines (redundant).

- Too many shades of gray.

- Lines too thin (or thick).

- Using 3D bars for only two variables (unnecessary).

- Lettering too small.

- Symbols too small or difficult to distinguish.

- Redundant titles.

- Using gray symbols or lines.

- Key outside the graph.

- Unnecessary numbers in the axis.

- Multiple colors mapping to the same shade of gray.

- Unnecessary shading in the background.

- Using bitmap graphics (instead of vector graphics).

- General carelessness.

Top Ten Avoidable Reasons Papers Get Rejected (with Solutions)

Here’s a summary of the most common (and avoidable) reasons papers get rejected:

- Out of Scope: The paper doesn’t fit the conference’s topic.

  - Solution: Frame your problem as a KDD problem, reference relevant SIGKDD papers, and use common KDD datasets and evaluation metrics.

- Not Reproducible: Reviewers can’t replicate your results.

  - Solution: Create a webpage with all data and code, and test the reproducibility yourself.

- Too Similar to Your Last Paper: It looks like you’re “double-dipping.”

  - Solution: Reference your previous work and clearly explain how this paper extends it.

- Unacknowledged Weakness: You don’t address a significant limitation of your approach.

  - Solution: Explicitly acknowledge weaknesses and explain why the work is still useful.

- Unfairly Diminishing Others’ Work: You’re overly critical of previous work.

  - Solution: Be respectful and objective. Consider sending a preview to the authors you’re critiquing.

- Easier Solution Exists/Not Compared to X: You haven’t considered simpler or alternative approaches.

  - Solution: Include simple strawmen and explain why other methods won’t work.

- Missing References/Idea Already Known: You haven’t done a thorough literature search.

  - Solution: Do a detailed literature search, use mock reviewers, and consider a longer tech report for extensive related work.

- Too Many Parameters/Arbitrary Choices: Your algorithm has too many parameters or unjustified choices.

  - Solution: Minimize parameters, explain how to set them, or show that the exact values don’t matter. Justify every choice.

- Uninteresting/Unimportant Problem: Reviewers don’t see the value of your work.

  - Solution: Use real data, collaborate with domain experts, explicitly state the problem’s importance, and estimate the value of your solution.

- Careless Writing/Typos/Unclear Figures: The paper is poorly written and presented.

  - Solution: Finish writing well ahead of time, use mock reviewers, and take pride in your work!

Conclusion

Getting published in a top-tier conference like SIGKDD is challenging, but it’s definitely achievable. By taking a systematic approach, being self-critical, and focusing on clarity, reproducibility, and impact, you can significantly increase your chances of success. Remember, think like a reviewer! Put yourself in their shoes and make your paper as easy as possible for them to understand and appreciate. Good luck!