TL;DR

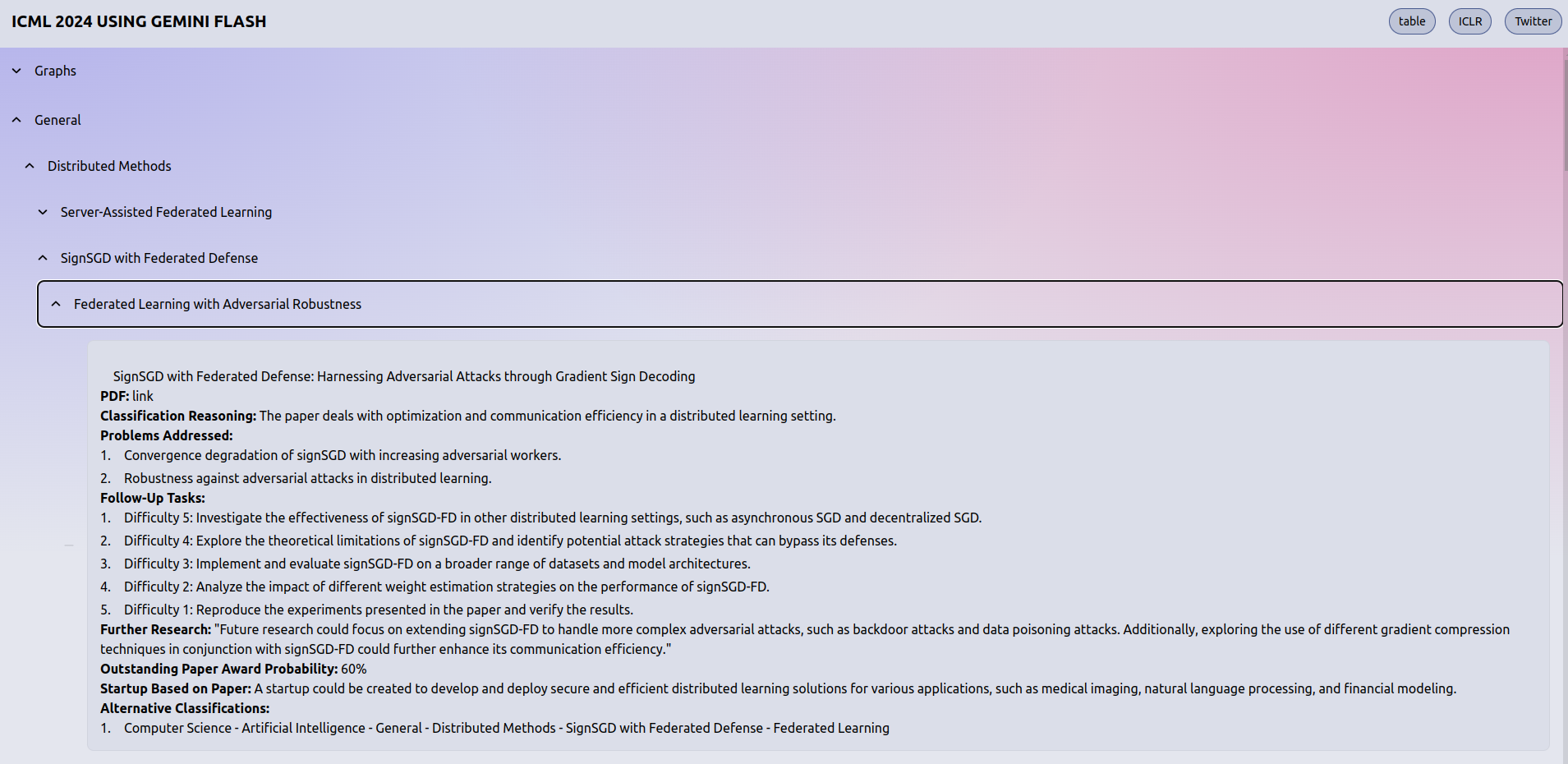

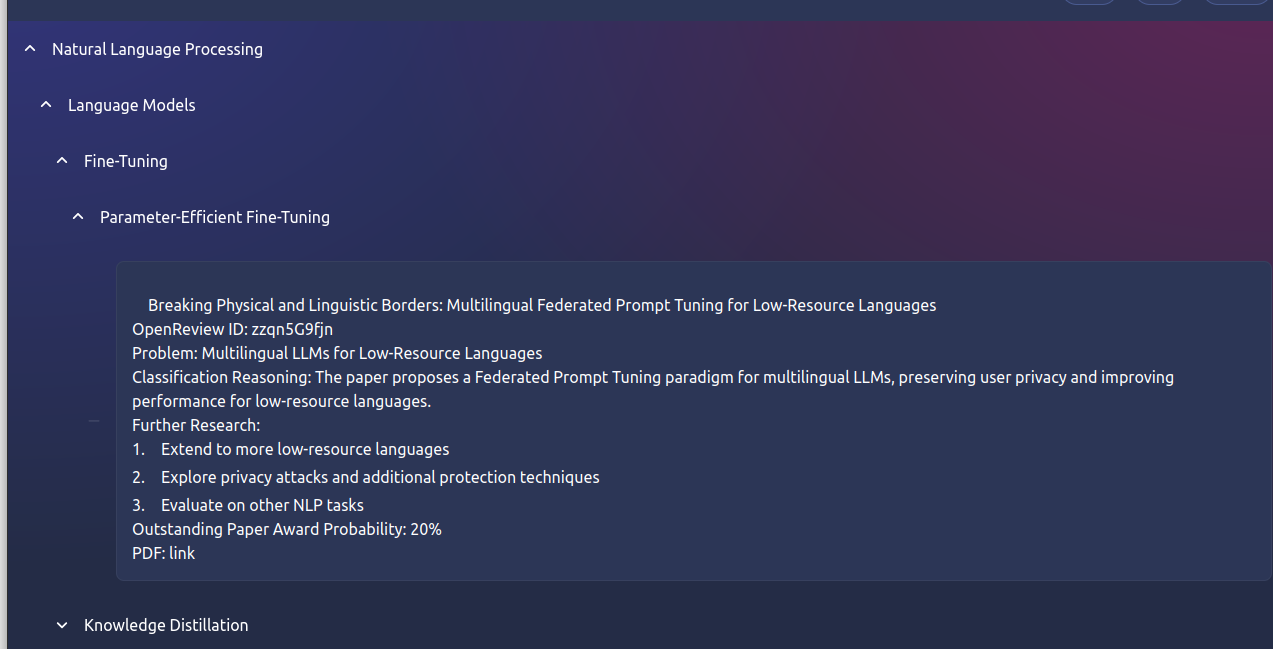

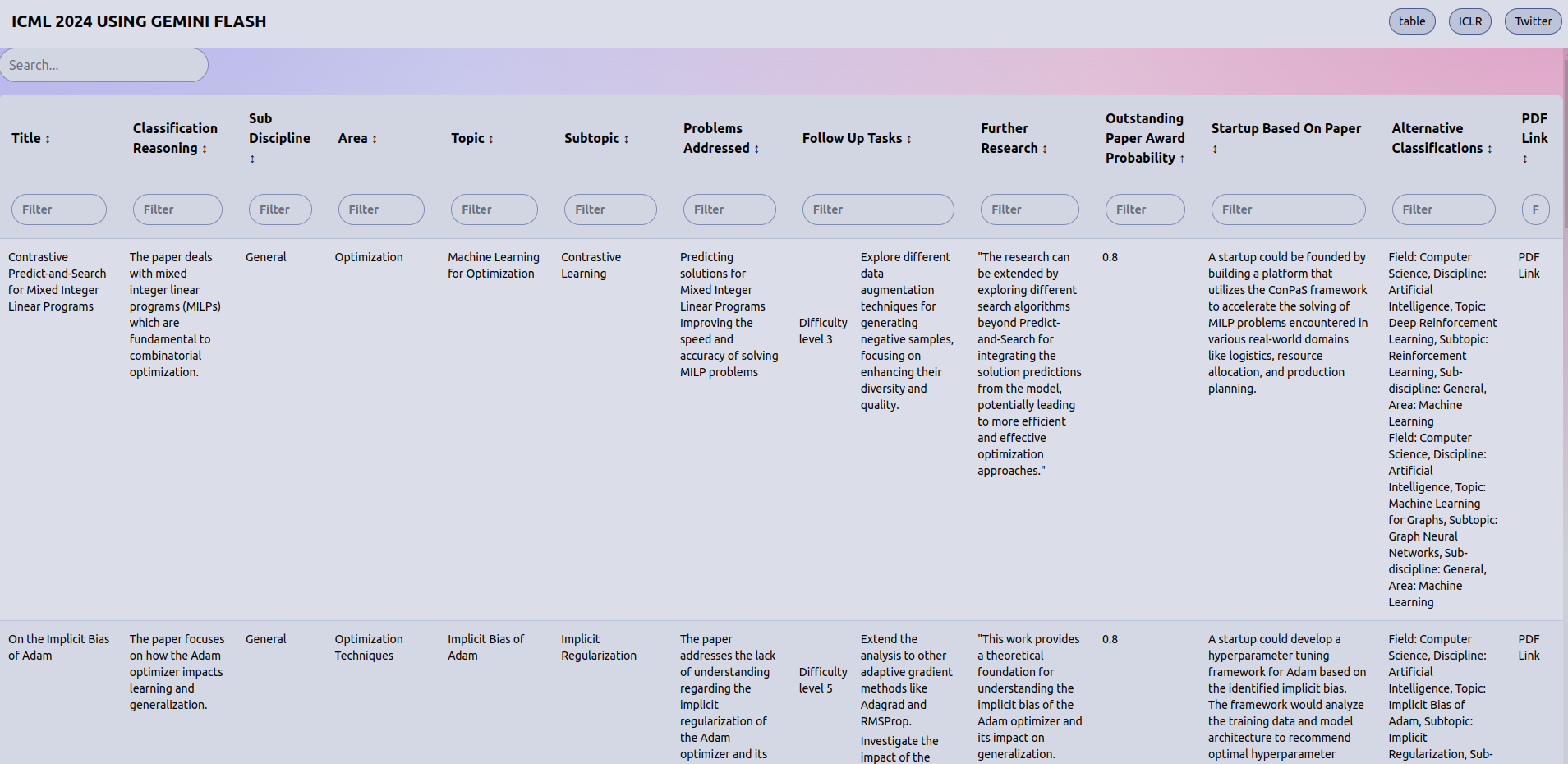

I built https://arxiv-cat.eamag.me/icml_gemini_flash, a website that classifies all International Conference on Machine Learning 2024 papers into different categories. I tried three approaches:

- Predict 4 categories and subcategory levels at the same time using Cohere R+

- Predict one category at a time, adjusting the prompt to offer a choice of subcategories, using Gemini Flash

- Create embeddings for each paper using Qwen 2, cluster them recursively using HDBSCAN, and label the clusters automatically

Unfortunately, it doesn’t quite work as expected, and the result is a bit difficult to navigate. I want to find out how to improve it, or whether automated paper classification is beyond what LLMs can do because it requires some form of reasoning.

Motivation

I want to increase technological progress using AI, either via research or by applying research to real life. However, I don’t know what many of the topics are, or which low-hanging fruit I could start contributing to right now. For example, how would I answer the question “where do I get started in Mechanistic Interpretability?”

The usual path is to do a PhD and have a good advisor who already has a lot of knowledge about topics and is up-to-date with the latest trends. This is what mentors are supposed to do: help to formulate good questions and good experiments. However, I’ve heard many stories from PhD friends, and currently I want to find an alternative path.

I read this article about solo research, which inspired me, but I also understand the difficulties. I was also very impressed by Neel’s post on 200 concrete open problems in interpretability, and I wondered why we don’t have such a list for every field.

But then I thought, what are these fields and topics within them? Authors put simple classifications or tags on their papers when submitting to journals, but wouldn’t it be nice to do the same for all papers in one place, similar to what Papers with Code does for code? And can we do it automatically using LLMs?

Approach

ICLR

I decided to start within a constrained field, and the ICLR 2024 conference seemed like a good fit. The (incomplete) result can be found at https://arxiv-cat.eamag.me/iclr.

I used the OpenReview API to get all papers and reviews with comments, and simple PDF parsing to remove content that shouldn’t matter for classification and would just consume tokens, like everything below citations.
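The trimming step could look roughly like the sketch below. It assumes the PDF has already been converted to plain text and that the bibliography starts at a line reading "References" or "Bibliography"; the function name and the heading pattern are illustrative, not the code I actually ran.

```python
import re

# Headings that commonly open the bibliography. This list is an assumption;
# real papers may need more variants.
_REFERENCE_HEADING = re.compile(
    r"^[ \t]*(?:\d+\.?[ \t]+)?(?:references|bibliography)[ \t]*$",
    re.IGNORECASE | re.MULTILINE,
)


def strip_after_references(text: str) -> str:
    """Return the text that precedes the last references heading, if any."""
    matches = list(_REFERENCE_HEADING.finditer(text))
    if not matches:
        return text  # No heading found: keep the whole paper.
    # Use the last match so a table-of-contents entry does not cut the paper early.
    return text[: matches[-1].start()].rstrip()
```

Cutting at the heading also drops any appendix that follows the citations, which is the intended trade-off here: fewer tokens per request at the cost of some detail.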

I decided to use Cohere’s Command R Plus because, in early May, it was one of the few models with a large context window that was freely available in Germany. I included everything about the paper in the prompt, along with instructions (“here’s the paper, here are reviews, here’s an example output, your task is to classify without repeating”) and some prompt engineering. I stored the results in a SQLite database, handling issues like output that wasn’t valid JSON and removing characters like “\x00” that appeared in the papers.
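A minimal sketch of that cleanup and storage step, using only the Python standard library. The table name, column names, and helper names are hypothetical, and the JSON extraction is a simple heuristic (take everything from the first `{` to the last `}`), not necessarily what a production pipeline should do.

```python
import json
import re
import sqlite3


def clean_text(text: str) -> str:
    """Drop NUL characters, which appeared in some extracted papers."""
    return text.replace("\x00", "")


def parse_json_reply(reply: str):
    """Parse the span from the first '{' to the last '}', or return None."""
    match = re.search(r"\{.*\}", reply, re.DOTALL)
    if match is None:
        return None
    try:
        return json.loads(match.group(0))
    except json.JSONDecodeError:
        return None


def store_result(conn: sqlite3.Connection, paper_id: str, reply: str) -> bool:
    """Save one paper's parsed reply; return False if it was not usable JSON."""
    parsed = parse_json_reply(clean_text(reply))
    if parsed is None:
        return False
    # Hypothetical schema: one row per paper, categories kept as a JSON string.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS classifications "
        "(paper_id TEXT PRIMARY KEY, categories TEXT NOT NULL)"
    )
    conn.execute(
        "INSERT OR REPLACE INTO classifications VALUES (?, ?)",
        (paper_id, json.dumps(parsed)),
    )
    conn.commit()
    return True
```

Returning `False` instead of raising makes it easy to collect the failed paper IDs and send them through the model again later.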

I quickly ran out of the 1,000 free requests, so I visualized the data I had using SvelteKit and Skeleton. However, the results were not as expected. I had hoped to see nice hierarchical category trees, but the categories were all mixed up, repeated across different levels, and not very usable for questions like “what is happening right now in Graph Neural Networks for drug design?”

I tried to clean up the categories using LLMs again (“here are the categories, combine them into similar ones”), but either my prompt engineering skills weren’t up to par, or the task was too confusing for the model without the full paper context.

After a short break reformulating the task, I decided to continue with ICML while also focusing more on finding open problems instead of just classification.

ICML using Gemini

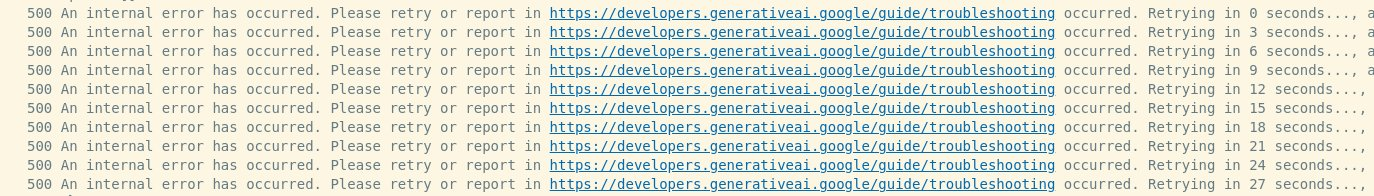

I had hoped to use Gemini 1.5 Pro with forced JSON output, but the free tier is not available in Europe. Even though it said “free of charge” when creating an API key without connecting a billing account, my other billing account got charged unexpectedly.

So I switched to Gemini Flash, where the JSON output schema is just a suggestion. I had to retry requests several times to get JSON output instead of other formats. This model was also unreliable, and I couldn’t get any support, which was disappointing.
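The retry logic can be sketched independently of any particular API. Below, `ask_model` is a hypothetical callable that sends a prompt and returns the raw reply text, and `required_keys` is an assumed stand-in for whatever fields the schema asks for; neither is part of the Gemini client library.

```python
import json
from typing import Callable, Optional, Sequence


def call_until_json(
    ask_model: Callable[[str], str],
    prompt: str,
    required_keys: Sequence[str] = ("category",),
    max_attempts: int = 3,
) -> Optional[dict]:
    """Call the model until it returns a JSON object with the required keys."""
    for _ in range(max_attempts):
        reply = ask_model(prompt)
        try:
            parsed = json.loads(reply)
        except json.JSONDecodeError:
            continue  # Not JSON at all: ask again.
        if isinstance(parsed, dict) and all(key in parsed for key in required_keys):
            return parsed
        # Valid JSON but the wrong shape: also ask again.
    return None  # Give up after max_attempts tries.
```

Capping the attempts matters on a rate-limited free tier, since every retry counts as another request.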

My prompt strategy involved calling the model multiple times, trying to predict one category level at a time while suggesting available categories based on the previous categorization.
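In outline, the level-by-level strategy looks something like the sketch below. The nested-dict taxonomy, the prompt wording, the `choose` callable (a stand-in for one model call that returns a category name), and the depth limit of 4 are all assumptions made for illustration.

```python
from typing import Callable, List


def build_prompt(abstract: str, path: List[str], options: List[str]) -> str:
    """Ask for exactly one category at the current level."""
    chosen = " > ".join(path) if path else "(none yet)"
    return (
        f"Paper abstract:\n{abstract}\n\n"
        f"Categories chosen so far: {chosen}\n"
        f"Pick exactly one of the following options: {', '.join(options)}"
    )


def classify_by_level(
    abstract: str,
    taxonomy: dict,
    choose: Callable[[str], str],
    max_depth: int = 4,
) -> List[str]:
    """Walk a nested {category: {subcategory: ...}} dict one level per call."""
    path: List[str] = []
    node = taxonomy
    while node and len(path) < max_depth:
        options = sorted(node)
        choice = choose(build_prompt(abstract, path, options))
        if choice not in node:
            break  # The model answered outside the offered options; stop here.
        path.append(choice)
        node = node[choice]  # Only this category's children are offered next.
    return path
```

The sketch presupposes a fixed taxonomy to walk, which is exactly what was missing in practice: without good initial categories there is little to offer the model at each level.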

Unfortunately, this approach didn’t work either, likely because I lacked initial categories (except those parsed from Papers with Code), and there was still some category overlap. The final result at https://arxiv-cat.eamag.me is not very usable for my goals of understanding research topics and open problems.

For exploration purposes, I added fields like “probability of receiving an Outstanding Paper award” and “potential startup ideas based on this paper.” The data is presented in a searchable, sortable table at https://arxiv-cat.eamag.me/datatable.

ICML (embeddings clustering)

The basic idea: it should be easier for the model to come up with labels if it can see which labels already exist and which other papers have similar labels. BERTopic does something similar, but I want better labels.

Here I create embeddings of the ICML papers with qwen2 served through ollama (chosen from the embeddings leaderboard), run HDBSCAN on top of them, and then ask the same LLM to name each cluster and sub-cluster. I tried several variants: the UMAP-based approach that Spotify used, clustering the raw embeddings directly, and embedding only text+abstract. I also tried tuning the HDBSCAN parameters and switching to KMeans, but nothing really works: papers inside a cluster are often not closely related, or related papers end up split across different clusters. Labeling the clusters and then re-labeling them in a second pass, with information about the other clusters in the prompt, did improve the results, but they are still not good enough.
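The recursive part of the clustering can be sketched without committing to a specific library. In the sketch below, `cluster_fn` is a hypothetical callable that returns one integer label per vector, with -1 meaning noise (the convention HDBSCAN implementations use); the tree layout, `min_size`, and `max_depth` are assumptions for illustration.

```python
from collections import defaultdict
from typing import Callable, List, Sequence

Vector = Sequence[float]
ClusterFn = Callable[[List[Vector]], Sequence[int]]


def recursive_clusters(
    ids: List[str],
    vectors: List[Vector],
    cluster_fn: ClusterFn,
    min_size: int = 5,
    max_depth: int = 3,
    depth: int = 0,
) -> dict:
    """Return a tree of the form {'papers': [...], 'children': [subtrees]}."""
    node = {"papers": list(ids), "children": []}
    if depth >= max_depth or len(ids) < 2 * min_size:
        return node  # Too deep or too small to split further.
    labels = cluster_fn(list(vectors))
    groups = defaultdict(list)
    for index, label in enumerate(labels):
        if label != -1:  # Noise points stay only in the parent node.
            groups[label].append(index)
    if len(groups) < 2:
        return node  # The clusterer found no meaningful split.
    for label in sorted(groups):
        members = groups[label]
        node["children"].append(
            recursive_clusters(
                [ids[i] for i in members],
                [vectors[i] for i in members],
                cluster_fn, min_size, max_depth, depth + 1,
            )
        )
    return node
```

With a recent scikit-learn installed, something like `lambda X: HDBSCAN(min_cluster_size=5).fit_predict(X)` (from `sklearn.cluster`) should fit the `cluster_fn` slot. Each node's `papers` list is then what gets passed to the LLM for naming.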

Is this a limitation of the approach? Is there a way to fine-tune the LLM so that its embeddings cluster better? I’m not sure yet. You can see the best result I’ve got here; the clusters can’t even be named correctly because the papers inside them are too diverse.

Learnings

It seems we’re not quite there yet with LLMs for automated paper classification, or perhaps this task requires a level of reasoning that LLMs may never achieve. I knew about Effective Thesis and had hoped to help automate their process, but it looks like we still need manual input from experts for now.

Prompting is still a significant time sink, and automatic prompt enhancement (e.g., using other LLMs) could become an important area of research.

As with classic machine learning, it’s difficult to understand why outputs differ from expectations. But with LLMs, the black box is even larger, making it hard to influence beyond trial and error.

Good result visualization is key. Adding a tree structure made evaluation much easier for this project.

Next Steps

- Clean up and open source the code

- Get more feedback and think about an end-to-end approach to clustering, where the model directly produces embeddings that are well separated in the embedding space

- Fine-tune LLM on a paper-topic dataset

- ??? Open to more suggestions!