TL;DR

I’m building “Paper2project”, a tool that aims to identify useful and relevant projects from the latest scientific papers. You can find the first ranked projects at https://openreview-copilot.eamag.me/projects. I’d love to get more feedback and contributions!

Motivation

Some people have lots of time and don’t want to waste it scrolling social media. Others want to contribute to scientific progress but don’t know how to apply their skills, or which skills to develop. This project aims to lower the barrier to entry for scientific contribution by personalizing potential projects to a person’s capabilities and interests. I previously tried to build something similar in Automated Paper Classification, but that was mostly focused on paper categories, for example to keep track of new work on Sparse Autoencoders.

Vision

Based on a person’s interests, we suggest the scientific projects that would have the most impact on the world. Interests can be collected from the person’s device profiles or through a chat. Projects are stored and queried on demand, and each project is rated along several dimensions. This could be extended to company-level research, surfacing the most relevant updates and patents.
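To make “stored and queried on demand” a bit more concrete, here is a minimal sketch of such a project store. It is an illustration, not how Paper2project currently works: the Project fields, the tag-overlap matching and the rating dimensions (impact, feasibility) are all hypothetical, since the post only says projects are rated along several dimensions.

```python
from dataclasses import dataclass, field


@dataclass
class Project:
    title: str
    level: str  # audience level, e.g. "undergraduate"
    tags: set[str]  # topics the project touches
    # Hypothetical rating dimensions, e.g. {"impact": 0.9, "feasibility": 0.5}
    ratings: dict[str, float] = field(default_factory=dict)


def query(
    projects: list[Project],
    level: str,
    interests: set[str],
    weights: dict[str, float],
    top_k: int = 3,
) -> list[Project]:
    """Return the top_k projects for one level, ranked by interest overlap,
    then by a weighted sum of the project's ratings."""

    def score(p: Project) -> tuple[int, float]:
        overlap = len(p.tags & interests)
        quality = sum(weights.get(dim, 0.0) * v for dim, v in p.ratings.items())
        return overlap, quality

    candidates = [p for p in projects if p.level == level]
    return sorted(candidates, key=score, reverse=True)[:top_k]
```

Sorting on the (overlap, quality) tuple means interest match always wins and ratings only break ties; a weighted blend of the two would be an equally reasonable choice.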

Current version scope

- Only use papers from ICLR 2025

- Main focus is on top-rated papers in the interpretability and explainable AI area

- For each paper, projects are extracted at several complexity levels:

  - No coding

  - High school student

  - Undergraduate (main focus)

  - PhD in the same field

  - PhD in another field

  - Entrepreneur (turning the project into real-world use)

- Projects are filtered and ranked through an LLM agent tournament

- Top projects get a step-by-step, handholding how-to guide

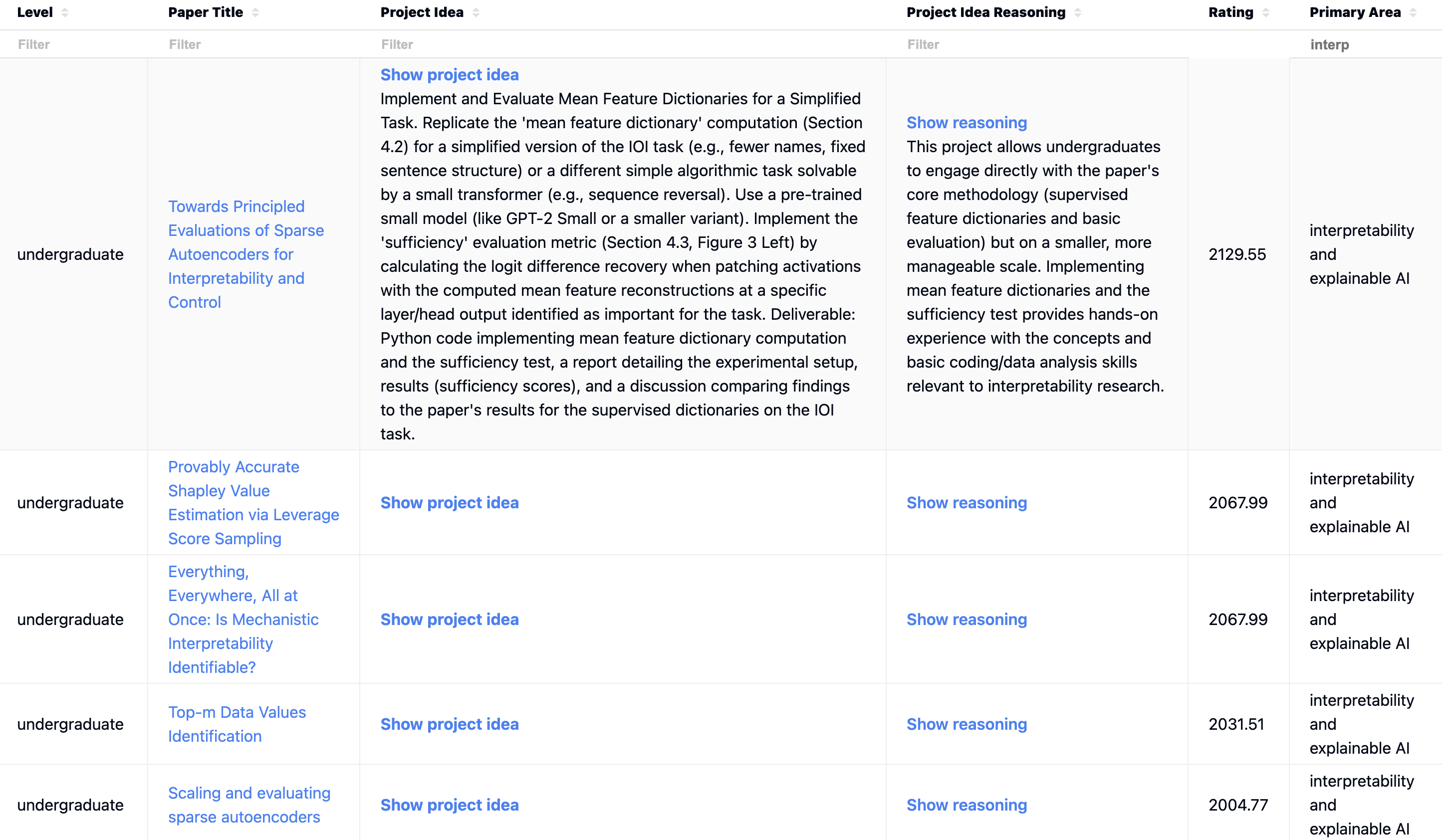

Example from https://openreview-copilot.eamag.me/projects:

Use cases

- Student: instead of a beginner project like "Code a simple CNN in Python", they get a dissertation project on using Sparse Autoencoders in explainable AI

- Researcher: finds a top-rated project relevant to their initial idea and collaborates with the authors

- Entrepreneur: takes a Blender procedural generation paper and turns it into an easy-to-use, SaaS-like plugin

- LLM: takes the latest attention-improvement paper and implements it in an open-source repo

Methodology

Paper extraction

I get (mostly anonymous) papers from OpenReview, similar to what I did in Openreview For PaperQA, and use Gemini 2.5 Pro to extract projects with a structured output like this:

    "a_reasoning": content.Schema(
        type=content.Type.STRING,
    ),
    "project": content.Schema(
        type=content.Type.OBJECT,
        enum=[],
        required=[
            "high_school",
            "no_coding_experience",
            "entrepreneur",
            "undergraduate",
            "phd_same_field",
            "phd_other_field",
        ],
        properties={
            "high_school": content.Schema(
                type=content.Type.OBJECT,
                enum=[],
                required=["project", "a_reasoning"],
                properties={
                    "a_reasoning": content.Schema(
                        type=content.Type.STRING,
                    ),
                    "project": content.Schema(
                        type=content.Type.STRING,
                    ),
                },
            ),
            # ... the other five levels are omitted here
        },
    ),

I use a_reasoning so that a thinking trace is generated for each project before the project itself; I noticed it improves the project ideas.
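Writing the same nested object out six times is repetitive, so a small helper could generate it. This sketch builds the schema as a plain Python dict in JSON-Schema style instead of with content.Schema; whether your Gemini client accepts a dict in exactly this form is an assumption to check, and the top-level required list is also assumed, since the excerpt above doesn't show it.

```python
from typing import Any

# Audience levels, in the order of the original "required" list.
LEVELS = (
    "high_school",
    "no_coding_experience",
    "entrepreneur",
    "undergraduate",
    "phd_same_field",
    "phd_other_field",
)


def reasoned_project() -> dict[str, Any]:
    """One level's object: a reasoning trace plus the project text."""
    return {
        "type": "object",
        "properties": {
            # "a_" sorts before "project"; if fields are generated in
            # alphabetical order, the reasoning comes out first.
            "a_reasoning": {"type": "string"},
            "project": {"type": "string"},
        },
        "required": ["project", "a_reasoning"],
    }


def build_project_schema(levels: tuple[str, ...] = LEVELS) -> dict[str, Any]:
    """Top-level schema: a reasoning trace, then one project per level."""
    return {
        "type": "object",
        "properties": {
            "a_reasoning": {"type": "string"},
            "project": {
                "type": "object",
                "properties": {level: reasoned_project() for level in levels},
                "required": list(levels),
            },
        },
        # Assumed: the excerpt in the post doesn't show the top-level list.
        "required": ["a_reasoning", "project"],
    }
```

Adding or dropping an audience level then only means editing LEVELS.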

Embed and deduplicate

This step is optional; I added it because I wanted to replicate the Proximity Agent from a recent Google paper. I created embeddings with gemini-embedding-exp-03-07 and compared projects using cosine similarity, clustering, and graph construction with NetworkX, but the resulting clusters mostly mirror the predefined OpenReview areas.
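A rough sketch of the deduplication idea, in pure Python so it runs without extra packages: link two projects when the cosine similarity of their embeddings passes a threshold, treat connected components as clusters, and keep one project per cluster. The 0.9 threshold and the "keep the first one" rule are hypothetical choices; with NetworkX you would add the same edges to an nx.Graph and call nx.connected_components instead of the union-find below.

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity of two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def similarity_clusters(
    embeddings: list[list[float]], threshold: float = 0.9
) -> list[list[int]]:
    """Connected components of the graph linking pairs with cosine >= threshold."""
    parent = list(range(len(embeddings)))

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for i in range(len(embeddings)):
        for j in range(i + 1, len(embeddings)):
            if cosine(embeddings[i], embeddings[j]) >= threshold:
                parent[find(i)] = find(j)  # merge the two components

    clusters: dict[int, list[int]] = {}
    for i in range(len(embeddings)):
        clusters.setdefault(find(i), []).append(i)
    return sorted(clusters.values())


def deduplicate(embeddings: list[list[float]], threshold: float = 0.9) -> list[int]:
    """Indices of the projects to keep: the first one in each cluster."""
    return [cluster[0] for cluster in similarity_clusters(embeddings, threshold)]
```

The pairwise loop is quadratic, which is fine for a few thousand projects; beyond that an approximate nearest-neighbour index would be the usual replacement.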

Rank projects via agentic Glicko Tournament

For the undergraduate category, I put all projects into a 1v1 Swiss-system tournament. I again used Gemini models with structured output to hold a debate, improve each idea, and decide which idea is better. I also tried Gemma3:27b. An example output for two papers is in the footnotes.
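For readers unfamiliar with Glicko, here is a minimal sketch of a Glicko-1 update for a single 1v1 match, a greedy Swiss pairing, and a helper that turns the judge's result field (as in the footnote output) into a score. It uses the textbook Glicko-1 formulas and is not necessarily the rating code Paper2project uses; the Player class, the 1500/350 starting values and the pairing heuristic are assumptions.

```python
import math
from dataclasses import dataclass

Q = math.log(10) / 400  # Glicko-1 scaling constant


@dataclass
class Player:
    rating: float = 1500.0
    rd: float = 350.0  # rating deviation: how uncertain the rating is


def g(rd: float) -> float:
    """Shrinks the impact of games against opponents with uncertain ratings."""
    return 1 / math.sqrt(1 + 3 * Q**2 * rd**2 / math.pi**2)


def expected(p: Player, opp: Player) -> float:
    """Expected score of p against opp."""
    return 1 / (1 + 10 ** (-g(opp.rd) * (p.rating - opp.rating) / 400))


def glicko_update(p: Player, opp: Player, score: float) -> Player:
    """Glicko-1 update of p after one game (score: 1 win, 0.5 draw, 0 loss)."""
    e = expected(p, opp)
    g_opp = g(opp.rd)
    d2 = 1 / (Q**2 * g_opp**2 * e * (1 - e))
    precision = 1 / p.rd**2 + 1 / d2
    return Player(
        rating=p.rating + (Q / precision) * g_opp * (score - e),
        rd=math.sqrt(1 / precision),
    )


def score_from_judgment(judgment: dict) -> float:
    """Score for idea_1 given the judge's {"result": "idea_1" | "idea_2"}."""
    return {"idea_1": 1.0, "idea_2": 0.0}[judgment["result"]]


def swiss_pairs(
    ratings: dict[str, Player], played: set[frozenset[str]]
) -> list[tuple[str, str]]:
    """Greedy Swiss pairing: sort by rating, pair neighbours, avoid rematches."""
    pool = sorted(ratings, key=lambda pid: ratings[pid].rating, reverse=True)
    pairs: list[tuple[str, str]] = []
    while len(pool) > 1:
        a = pool.pop(0)
        # Closest-rated opponent not met yet; fall back to the nearest one.
        j = next(
            (k for k, b in enumerate(pool) if frozenset((a, b)) not in played), 0
        )
        pairs.append((a, pool.pop(j)))
    return pairs  # with an odd count, the last project sits out (a bye)
```

Compute both updates from the pre-match ratings, e.g. new_a = glicko_update(a, b, s) and new_b = glicko_update(b, a, 1 - s), before overwriting either. Full Glicko-1 also inflates RD between rating periods, which this sketch leaves out.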

Next steps

Please share your thoughts! You can text me directly anywhere, for example about the questions below.

How to improve projects

If projects are:

- Too simple or not important: add more (negative) examples to the prompt

- Already done by someone: add an agent that does a literature search during the project generation step

- Too big or complex: split them into smaller sub-projects at the handholding guide stage

How to improve ranking

I think this is a difficult problem. Right now I mostly rely on LLMs getting better, but if I could create a dataset of ranked ideas and their inspirations, we could train an LLM on it with RL. Such a dataset could be built, for example, from the most important citations in papers, giving pairs like {previous_paper: new_paper_title}.
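A minimal sketch of how such pairs could be assembled, assuming you already have citation records carrying some "influential" flag. How importance is actually decided is left open here, and the Citation class and its fields are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Citation:
    citing_title: str  # the new paper
    cited_title: str  # the earlier paper it builds on
    influential: bool  # hypothetical flag marking a "most important" citation


def build_idea_pairs(citations: list[Citation]) -> list[dict[str, str]]:
    """Keep only influential citations as (inspiration -> new idea) examples."""
    return [
        {"previous_paper": c.cited_title, "new_paper_title": c.citing_title}
        for c in citations
        if c.influential
    ]
```

It returns a list of records rather than a single {previous_paper: new_paper_title} dict, because one paper often inspires several follow-ups and a dict keyed by the earlier paper would keep only the last one.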

How to make this project more useful

I think it’s mostly about sharing the project, involving more people, and gathering feedback to improve it. Hence this post :)

Links and inspirations

- Neel Nanda’s post about open problems

- AI scientist blog post from Google

- Curie AI agents for science

- Future House automatic scientific discovery

- Sakana’s AI scientist

- Research Town simulator

- CodeScientist by Ai2

Footnotes

    {
      "debate": {
        "turn_1": "Expert A: Alright, let's review these proposals. Idea 1, based on the Sparse Autoencoder (SAE) paper, involves implementing mean feature dictionaries for a simplified task. It seems quite aligned with the source paper's core concepts but scales it down significantly. Focuses on interpretability techniques. Expert B: Idea 2 tackles dataset bias, replicating a key experiment from the 'Decade's Battle' paper using standard architectures like ResNet, and then extending it slightly by varying one parameter. This is more in the classic deep learning training/evaluation paradigm. Expert C: Initial thoughts: Idea 1 seems potentially more novel in *topic* for an undergrad, touching on cutting-edge interpretability, but might require more specific guidance on the theoretical underpinnings. Idea 2 feels more standard in *methodology* (train, test, analyze) which might be easier for an undergrad to grasp procedurally, but the novelty lies in the specific variable exploration.",
        "turn2": "Expert C: Let's talk feasibility. Idea 1 requires understanding activation patching and logit differences, using a pre-trained small transformer. Finding or setting up a *suitably small* model and defining a truly simple task is key. The coding itself might not be overly complex for the core logic, but debugging the interpretability setup could be tricky. Access to pre-trained models like GPT-2 Small is feasible. Expert B: Idea 2's feasibility hinges on accessing the datasets (YFCC, CC, DataComp can be large) and compute resources for training ResNets, even smaller ones. Standard deep learning libraries simplify implementation, but dataset handling and managing training runs require infrastructure and time. The variable exploration part seems feasible if kept constrained (e.g., preprocessing variations vs. complex architectural changes). Expert A: Learning potential: Idea 1 offers deep dives into mechanistic interpretability concepts – a valuable, emerging area. Skills involve specific analysis techniques (patching, logit diff), Python, and potentially PyTorch/TensorFlow for model interaction. Idea 2 provides solid experience in the end-to-end deep learning workflow: data handling, model training, evaluation, hyperparameter sensitivity analysis – broadly applicable skills.",
        "turn3": "Expert B: Novelty and scope: Idea 2's replication is standard, but the specific investigation (e.g., effect of specific object categories on dataset classification) could yield genuinely interesting, novel results within its narrow scope. The scope seems manageable if the variable chosen is well-defined. Expert A: Idea 1's novelty is high in terms of *applying* these specific interpretability methods (mean dictionaries, sufficiency) at an undergrad level. Even on a simplified task, it's less common than standard DL projects. The scope seems appropriate – implement two specific techniques from the paper. Testability is clear via the sufficiency metric. Expert C: Alignment: Both align well. Idea 1 directly implements simplified versions of techniques discussed (Sections 4.2, 4.3). Idea 2 replicates the core experiment and adds a standard sensitivity analysis, a natural extension. Both require careful reading of the source papers.",
        "turn4": "Expert C: Considering the typical undergrad timeframe (1-2 semesters) and resources, Idea 1 might be slightly less demanding computationally and in terms of data management once the initial conceptual hurdle of interpretability techniques is overcome. The simplified task definition is crucial here. Expert B: However, the conceptual barrier for Idea 1 *could* be higher for students without prior exposure to transformer internals or interpretability. Idea 2 leverages more standard DL workflows, potentially making it easier to get started, even if compute/data requirements are larger. Expert A: I lean slightly towards Idea 1 for its unique learning opportunity in interpretability, assuming adequate mentorship is available. The technical implementation seems less resource-intensive than potentially large-scale training in Idea 2. The 'sufficiency' metric provides a clear quantitative goal. Expert B: I see the appeal of Idea 1's topic, but Idea 2 offers more transferable, standard DL skills. Perhaps it depends on the student's specific interests and the course context. Expert C: Let's weigh feasibility and learning potential. Idea 1 offers specialized learning, potentially higher conceptual difficulty, but lower resource needs. Idea 2 offers broader DL skills, potentially lower conceptual difficulty (for the replication part), but higher resource needs. Both are testable and reasonably scoped if managed well. Given the goal of providing a feasible *and* potentially novel experience, Idea 1 might have a slight edge due to the uniqueness of the interpretability angle for undergrads, provided the task simplification is done effectively.",
        "turn5": null,
        "turn6": null,
        "turn7": null,
        "turn8": null,
        "turn9": null,
        "turn10": null
      },
      "improvements": {
        "idea_1": "* Clearly define the 'simplified task' upfront (e.g., specific logic gate simulation, string manipulation).\n* Provide starter code for loading the pre-trained model and accessing activations.\n* Suggest specific layers/heads to analyze based on prior work or preliminary probing.\n* Recommend using a very small model (e.g., distilled versions if available) to speed up iteration.\n* Add a qualitative analysis component: visualize some features or activation patterns.",
        "idea_2": "* Specify *which* datasets to use and recommend using smaller subsets initially (e.g., 10k images per dataset).\n* Provide clear baseline code or point to a specific repository for the replication part.\n* Strongly constrain the 'single variable' exploration to ensure feasibility (e.g., focus *only* on data augmentation types, or *only* on training set size ratios).\n* Recommend specific compute resources or platforms (e.g., Google Colab Pro, university HPC access).\n* Encourage rigorous statistical testing of the results comparing different variable settings."
      },
      "judgment": {
        "explanation": "Both project ideas are derived well from their source papers and offer valuable learning experiences. Idea 1 focuses on cutting-edge interpretability techniques applied to a simplified problem, offering high novelty and specific skill development (activation patching, mechanistic analysis) with potentially lower computational demands but higher conceptual complexity initially. Idea 2 involves a more standard deep learning workflow (replication, sensitivity analysis) focused on dataset bias, offering broader skill development (training pipelines, data handling) but requiring significant data and compute resources. The panel leans towards Idea 1 primarily due to its higher novelty for an undergraduate project and potentially better feasibility regarding computational resources and data management, assuming the task simplification is effective and adequate mentorship is provided for the interpretability concepts. While Idea 2 builds valuable standard skills, its resource requirements and the potentially less novel core replication task make it slightly less ideal than the unique learning opportunity presented by Idea 1.",
        "result": "idea_1"
      }
    }